Despite years of innovation, ophthalmic clinical trials continue to face operational obstacles that can delay the introduction of new treatments. Far too often, clinical research still runs on antiquated workflows and tools. This is particularly problematic in endpoint collection, where manual, paper-based methods persist even for assessments in which timing and standardization are critical. From our experience leading clinical development and building technology platforms, we have come to believe that one of the most impactful innovations available to clinical trials is a modern, integrated approach to trial execution.

We write from two vantage points: one of us is a technologist and the founder of a company whose platform provides AI teammates for clinical research, and the other is a clinician-scientist with years of experience in ophthalmology clinical trials. We share a vision of a future in which AI-guided workflows transform how ophthalmic endpoints are collected, eliminating the preventable variability and protocol deviations that too often compromise promising studies.

The variability problem in ophthalmic studies

Anyone who has led a dry eye disease (DED) trial knows that many of the commonly used endpoints are highly variable. Tear breakup time (TBUT), a measure of tear film stability, is a familiar example in the dry eye world.

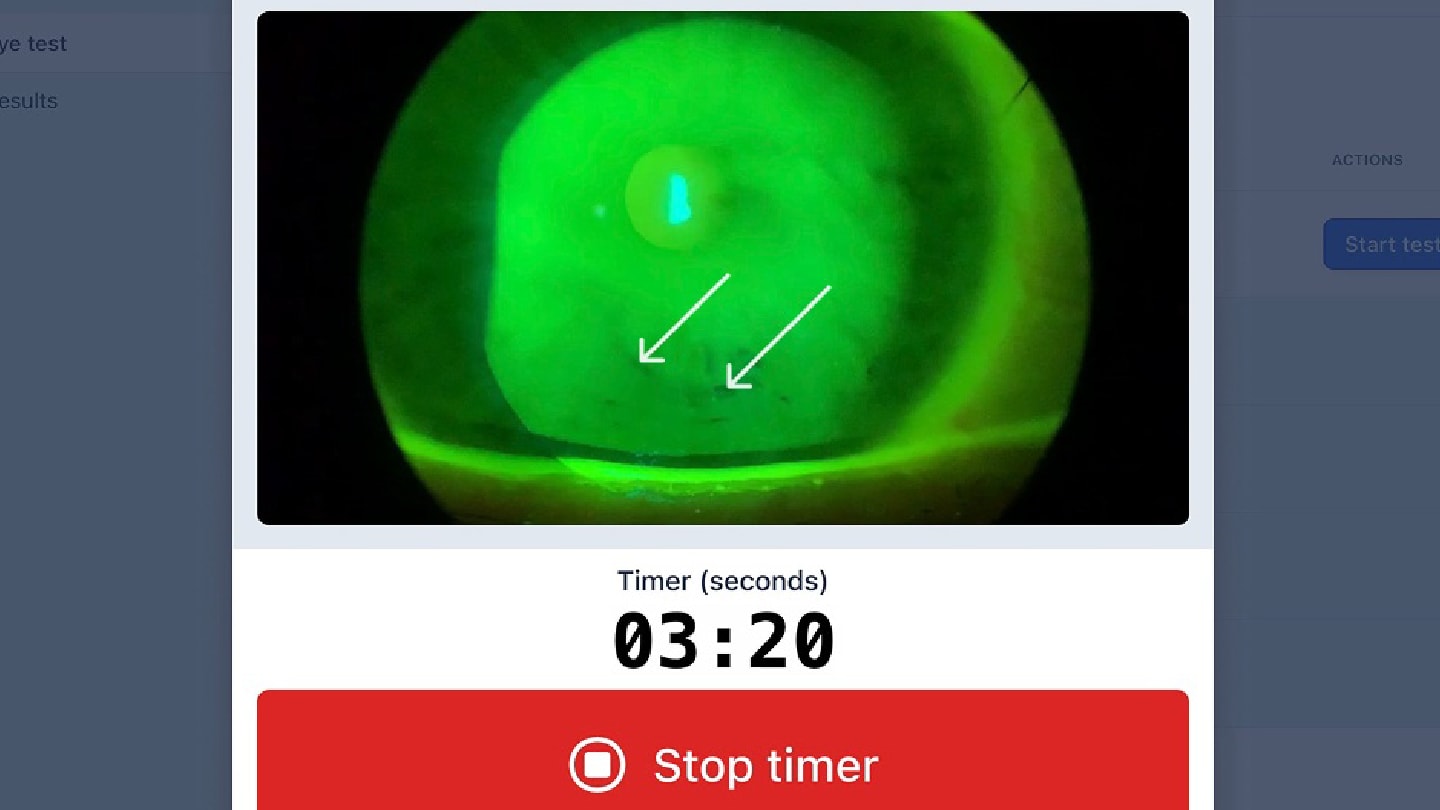

Even in 2025, this assessment typically relies on the investigator's subjective judgment. During TBUT evaluation, the investigator views the eye through a slit lamp biomicroscope and watches for the first break in the participant’s tear film. The test requires the investigator to detect the breakup by eye while simultaneously timing it with a stopwatch, from the moment the tear film is renewed by a blink until the moment it breaks up. Each of these factors, along with others such as participant cooperation, introduces test variability.

At the scale of a multi-site trial, with dozens of investigators and hundreds of patients, this variability becomes a financial problem as well as a quality problem. Poorly standardized assessments produce noisy data, which in turn requires larger sample sizes and reduces the likelihood of detecting significant differences between treatment groups at the end of the trial.
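The link between measurement noise and sample size can be made concrete with the standard normal-approximation formula for comparing two means with equal allocation: n per arm = 2(z_{1−α/2} + z_{1−β})² σ² / Δ², where σ is the outcome's standard deviation and Δ is the difference to detect. Required enrollment therefore scales with the variance σ², and rater error that is independent of the true value adds its own variance to it. The Python sketch below assumes illustrative numbers (a 1-second TBUT difference, a 2-second between-participant SD, 5% two-sided alpha, 80% power); they are not drawn from any trial, and real study designs may use more detailed methods.

```python
import math
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants per arm for a two-sided, two-sample comparison of means
    (normal approximation, equal allocation, equal SDs in both arms)."""
    z = NormalDist()                   # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 * sd ** 2 / delta ** 2)


def total_sd(true_sd: float, rater_sd: float) -> float:
    """Independent rater error adds variance: sigma_obs^2 = sigma_true^2 + sigma_rater^2."""
    return math.sqrt(true_sd ** 2 + rater_sd ** 2)


# Hypothetical inputs, for illustration only.
DELTA_S = 1.0     # difference in mean TBUT to detect, seconds
TRUE_SD_S = 2.0   # between-participant SD of true TBUT, seconds

for rater_sd in (0.0, 1.0, 2.0):
    n = n_per_group(DELTA_S, total_sd(TRUE_SD_S, rater_sd))
    print(f"rater error SD {rater_sd:.1f} s -> {n} participants per arm")
# prints 63, 79 and 126 participants per arm, respectively
```

Under these assumptions, rater error with an SD equal to the true between-participant SD doubles the variance and therefore roughly doubles the enrollment needed per arm.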

A new approach: embedded training and real-time validation

There is a better approach. Imagine deploying AI-driven guided endpoint modules within an integrated platform that not only supports data capture but also actively walks investigators through each assessment step, enforces timing windows, and records exact durations.

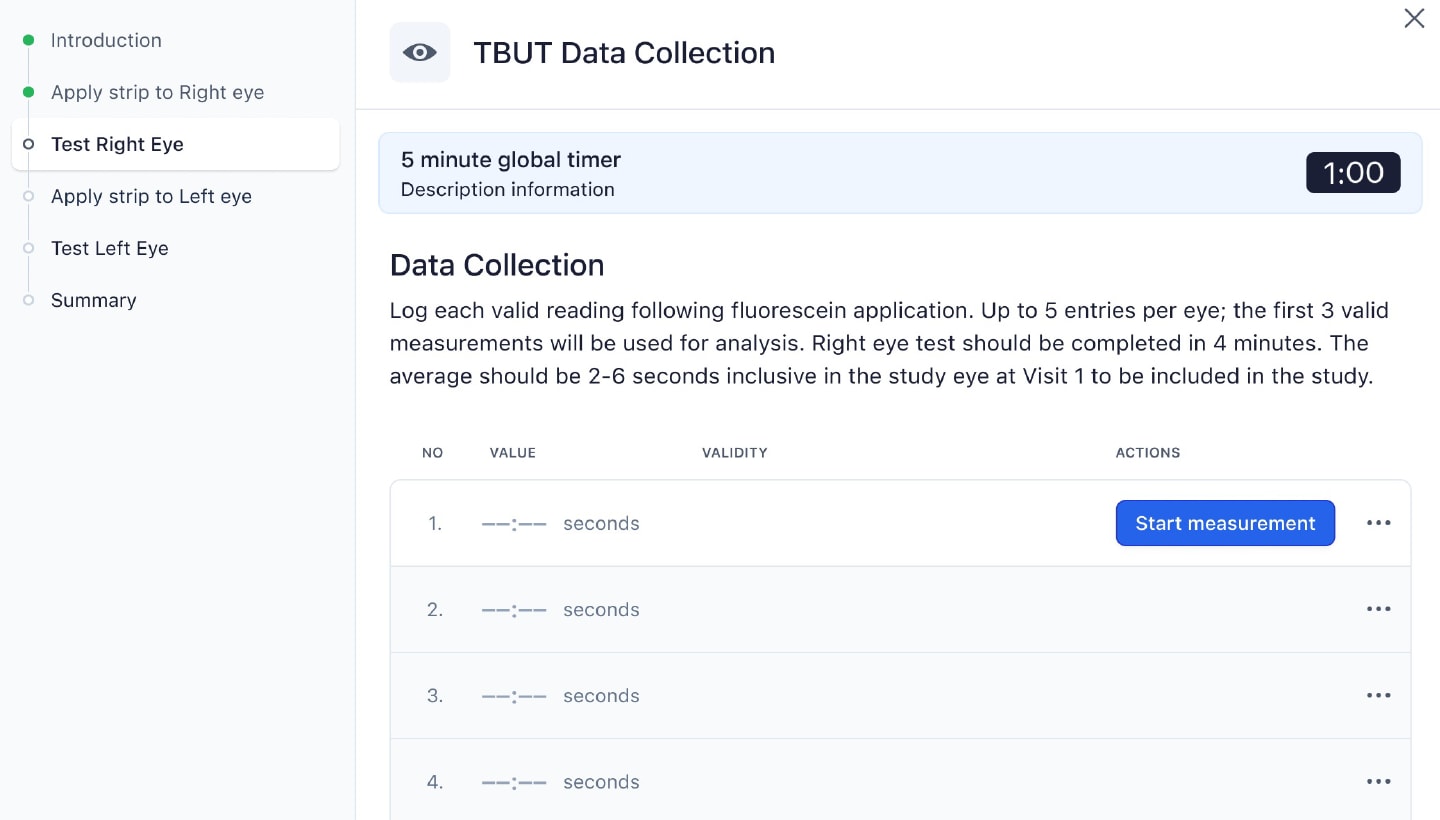

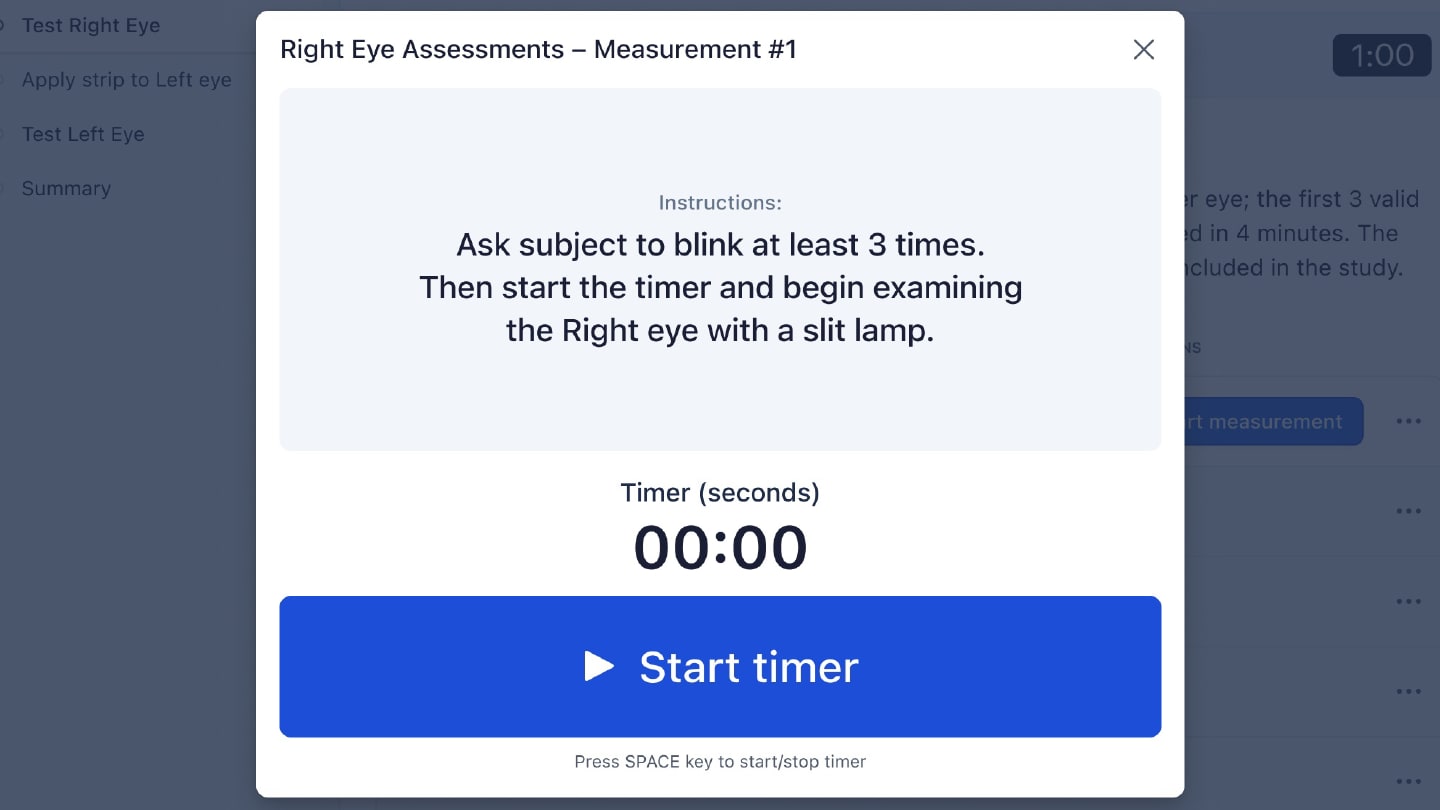

For TBUT (Figure 1), for example, the investigator first takes an exam on the basics of the method and is then guided through the workflow for participant testing. After the investigator applies fluorescein, the system starts a countdown, which standardizes the interval between fluorescein application and evaluation. The system also prohibits grading until the required time (the “tear settling period”) has passed, records whether the investigator takes too long to complete the evaluation, and, where the protocol requires it, automatically triggers a protocol deviation. It likewise tracks how many TBUT replicates have been completed and how many were discarded for poor quality. As a result, sponsors and contract research organizations (CROs) can more easily tell whether sites are following the protocol, and they can use these data to design their next trial better.

Workflow for Tear Break Up Time Data Collection
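The guided TBUT workflow described above behaves like a small state machine: grading is locked until the settling countdown ends, late evaluations are flagged for deviation review, and every replicate (kept or discarded) is counted. The Python sketch below illustrates that logic under stated assumptions; the settling period, evaluation window, replicate count, and the `TbutSession` class itself are hypothetical and do not represent any particular platform or protocol.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical protocol parameters, for illustration only.
SETTLING_PERIOD_S = 30.0   # minimum wait after fluorescein before grading may start
MAX_WINDOW_S = 120.0       # grading must finish within this many seconds after settling
REQUIRED_REPLICATES = 3    # accepted TBUT measurements needed per eye


@dataclass
class TbutSession:
    """Guided TBUT assessment for one eye; all times are seconds on one shared clock."""
    fluorescein_applied_at: float
    replicates: List[float] = field(default_factory=list)
    discarded: int = 0
    deviations: List[str] = field(default_factory=list)

    def grading_unlocked(self, t: float) -> bool:
        # The countdown: grading stays locked until the tear settling period has elapsed.
        return t - self.fluorescein_applied_at >= SETTLING_PERIOD_S

    def record_replicate(self, blink_at: float, breakup_at: float,
                         acceptable: bool = True) -> None:
        if not self.grading_unlocked(blink_at):
            raise PermissionError("Tear settling period has not elapsed; grading is locked.")
        if breakup_at < blink_at:
            raise ValueError("Breakup time cannot precede the blink.")
        # A late evaluation is recorded and flagged (not blocked) so it can be reviewed.
        deadline = self.fluorescein_applied_at + SETTLING_PERIOD_S + MAX_WINDOW_S
        if breakup_at > deadline:
            self.deviations.append(
                f"Replicate finished at {breakup_at:.1f} s, after the {deadline:.1f} s deadline")
        if not acceptable:
            self.discarded += 1  # count poor-quality replicates instead of silently dropping them
            return
        self.replicates.append(breakup_at - blink_at)  # TBUT = blink-to-breakup interval

    def is_complete(self) -> bool:
        return len(self.replicates) >= REQUIRED_REPLICATES

    def mean_tbut(self) -> Optional[float]:
        return sum(self.replicates) / len(self.replicates) if self.replicates else None


session = TbutSession(fluorescein_applied_at=0.0)
session.record_replicate(blink_at=31.0, breakup_at=38.0)
session.record_replicate(blink_at=45.0, breakup_at=51.5, acceptable=False)
session.record_replicate(blink_at=60.0, breakup_at=66.0)
session.record_replicate(blink_at=150.0, breakup_at=158.0)  # finishes after the 150 s deadline
print(session.mean_tbut(), session.discarded, len(session.deviations))  # 7.0 1 1
```

Flagging rather than blocking a late replicate reflects the text's description: the system records that the evaluation ran long and raises a deviation where the protocol calls for one, leaving the judgment about the data to the study team.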

Just as important, training is no longer a separate slide deck delivered at an investigator meeting. Instead, training simulations happen inside the same modules that investigators will use during the trial. This reduces cognitive switching and ensures that everyone receives the same information. The system includes built-in knowledge checks and performance tracking, so sponsors and CROs can see which investigators are proficient and which need additional training before they are allowed to see study participants.
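Gating participant assessments on training status can be expressed as a simple certification check. The sketch below assumes two hypothetical requirements (a minimum knowledge-check score and a number of passed simulations); the thresholds, the `TrainingRecord` class, and the helper names are illustrative only, not a real study's training plan.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical certification thresholds; a real study would define these in its training plan.
PASSING_SCORE = 0.80        # minimum fraction correct on the knowledge check
REQUIRED_SIMULATIONS = 2    # simulated assessments completed within tolerance


@dataclass
class TrainingRecord:
    investigator: str
    knowledge_check_score: float = 0.0
    simulations_passed: int = 0

    def is_certified(self) -> bool:
        return (self.knowledge_check_score >= PASSING_SCORE
                and self.simulations_passed >= REQUIRED_SIMULATIONS)


def require_certification(record: TrainingRecord) -> None:
    """Called before a live assessment opens; blocks uncertified investigators."""
    if not record.is_certified():
        raise PermissionError(f"{record.investigator} has not completed certification.")


def needs_additional_training(roster: List[TrainingRecord]) -> List[str]:
    """Sponsor/CRO view: who still needs training before seeing participants."""
    return [r.investigator for r in roster if not r.is_certified()]


roster = [
    TrainingRecord("Investigator A", knowledge_check_score=0.90, simulations_passed=2),
    TrainingRecord("Investigator B", knowledge_check_score=0.70, simulations_passed=3),
    TrainingRecord("Investigator C", knowledge_check_score=0.85, simulations_passed=1),
]
print(needs_additional_training(roster))  # ['Investigator B', 'Investigator C']
```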

Protocol deviations and the cost of delay

Integrating these workflows also has an impact well beyond the visit itself. It is not uncommon for a single site to rack up hundreds of protocol deviations. Often, small errors (mistimed assessments, missed steps, transposition errors, and the like) accumulate into major time and cost problems. They delay database lock, introduce noise, and strain the entire team at crunch time.

Without real-time validation, many of these deviations go undetected until final monitoring or reconciliation. At that point, it is often an “all hands on deck” situation to deliver the study on time. With guided workflows, sites are starting to prevent these deviations upstream, reducing downstream cleanup and frustration.
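Preventing small errors upstream amounts to running edit checks at the moment data are entered rather than at reconciliation. The Python sketch below shows three such checks: missing required fields, a visit outside its window, and an implausible TBUT value of the kind a transposition might produce (for example, 51 entered for 15). The visit schedule, ±2-day window, 0 to 30 second plausibility range, and field names are all hypothetical.

```python
from datetime import date
from typing import List

# Hypothetical visit schedule, window, and plausibility range (illustrative only).
VISIT_TARGET_DAY = {"Day 1": 1, "Day 14": 14, "Day 28": 28}
WINDOW_DAYS = 2
TBUT_RANGE_S = (0.0, 30.0)
REQUIRED_FIELDS = ("visit", "visit_date", "tbut_od", "tbut_os")


def check_entry(entry: dict, day1: date) -> List[str]:
    """Run edit checks on one visit record at the moment it is entered."""
    issues = [f"Missing required field: {f}" for f in REQUIRED_FIELDS if entry.get(f) is None]
    if issues:
        return issues  # the remaining checks need these fields
    study_day = (entry["visit_date"] - day1).days + 1  # the Day 1 visit is study day 1
    target = VISIT_TARGET_DAY.get(entry["visit"])
    if target is None:
        issues.append(f"Unknown visit: {entry['visit']}")
    elif abs(study_day - target) > WINDOW_DAYS:
        issues.append(f"{entry['visit']} on study day {study_day}, "
                      f"outside the ±{WINDOW_DAYS}-day window")
    lo, hi = TBUT_RANGE_S
    for eye in ("tbut_od", "tbut_os"):
        if not lo <= entry[eye] <= hi:
            # An implausible value may be a transposition, e.g. 51 entered for 15.
            issues.append(f"{eye} = {entry[eye]} s is outside {lo}-{hi} s; possible entry error")
    return issues


print(check_entry({"visit": "Day 14", "visit_date": date(2025, 1, 24),
                   "tbut_od": 51.0, "tbut_os": 5.1}, day1=date(2025, 1, 6)))
```

Returning a list of issues, rather than stopping at the first one, lets the site see and fix everything in one pass while the participant is still present.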

Designing trials with the next study in mind

Another critical benefit is the feedback loop this approach creates. Because data are captured in real time and include granular timing and usage metadata, we can see patterns across sites and studies. These patterns inform future studies that are more streamlined, more likely to meet their defined endpoints, and easier for everyone involved.
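Because each guided assessment logs its duration and any deviation, cross-site patterns reduce to simple aggregations. A minimal Python sketch, assuming a hypothetical event log of (site, assessment, duration in seconds, deviation flag) tuples, computes each site's median assessment duration and deviation rate; the log format is an assumption, not a description of any particular platform's export.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, List, Tuple

# Hypothetical event record: (site_id, assessment, duration_s, deviation_flag)
Event = Tuple[str, str, float, bool]


def site_summary(events: List[Event], assessment: str = "TBUT") -> Dict[str, dict]:
    """Per-site count, median duration, and deviation rate for one assessment type."""
    by_site: Dict[str, List[Tuple[float, bool]]] = defaultdict(list)
    for site, name, duration_s, deviated in events:
        if name == assessment:
            by_site[site].append((duration_s, deviated))
    return {
        site: {
            "n": len(rows),
            "median_duration_s": median([d for d, _ in rows]),
            "deviation_rate": sum(dev for _, dev in rows) / len(rows),
        }
        for site, rows in by_site.items()
    }


# Illustrative data only.
events = [
    ("101", "TBUT", 120.0, False), ("101", "TBUT", 140.0, True),
    ("101", "Schirmer", 300.0, True),  # different assessment; filtered out
    ("102", "TBUT", 300.0, True), ("102", "TBUT", 280.0, True), ("102", "TBUT", 260.0, False),
]
summary = site_summary(events)
# Sites with the highest deviation rates first, as candidates for retraining or process review.
for site, stats in sorted(summary.items(), key=lambda kv: kv[1]["deviation_rate"], reverse=True):
    print(site, stats)
```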

Historically, such insights have been anecdotal at best. By digitizing and better centralizing endpoint collection, we are surfacing site-level and procedure-level inefficiencies that would otherwise remain invisible.

Integration is foundational

One of the persistent challenges in study startup is system overload. Sites are often handed five or more platforms (e.g., Electronic Data Capture [EDC], Electronic Patient-Reported Outcome [ePRO], Clinical Trial Management System [CTMS], Interactive Response Technology [IRT], Trial Master File [TMF]) and expected to keep track of each system's purpose, password, and user interface. This is more than an inconvenience: it increases site burden and decreases compliance.

We believe the solution is to consolidate the entire stack, including data source documents, EDC, TMF, ePRO, IRT, CTMS, and training, into a single system. This would allow AI, in combination with human experts, to automate work across systems, enabling dynamic protocol enforcement, intelligent form configuration, and faster study startup.

Automation, AI teammates, and the next frontier

We believe the next step is to expand what AI can do for clinical research. From automatic inclusion/exclusion checks to real-time participant eligibility summaries, there is a clear path to making trials faster, cheaper, and more accurate without compromising rigor.
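Automatic inclusion/exclusion checks can be written as small, auditable rules evaluated against captured data, with the output framed as a summary for the investigator rather than a final decision, consistent with keeping humans in the loop. In the Python sketch below, the criteria (age, a TBUT cutoff, contact lens wear), field names, and thresholds are hypothetical placeholders, not criteria from any actual protocol.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Participant = Dict[str, Any]


@dataclass
class Criterion:
    label: str
    kind: str                                  # "inclusion" or "exclusion"
    condition: Callable[[Participant], bool]   # True when the stated condition holds


# Hypothetical criteria, for illustration only.
CRITERIA = [
    Criterion("Age 18 years or older", "inclusion", lambda p: p["age"] >= 18),
    Criterion("TBUT of 10 s or less in at least one eye", "inclusion",
              lambda p: min(p["tbut_od"], p["tbut_os"]) <= 10.0),
    Criterion("Contact lens wear", "exclusion", lambda p: bool(p["contact_lens"])),
]


def eligibility_summary(p: Participant) -> Tuple[bool, List[str]]:
    """Return (provisionally eligible, reasons) for investigator review.
    This is decision support only; the investigator confirms eligibility."""
    reasons = []
    for c in CRITERIA:
        holds = c.condition(p)
        if c.kind == "inclusion" and not holds:
            reasons.append(f"Inclusion not met: {c.label}")
        elif c.kind == "exclusion" and holds:
            reasons.append(f"Exclusion met: {c.label}")
    return (not reasons, reasons)


candidate = {"age": 52, "tbut_od": 6.5, "tbut_os": 11.0, "contact_lens": True}
print(eligibility_summary(candidate))  # (False, ['Exclusion met: Contact lens wear'])
```

Keeping each criterion as a labeled rule means every flag in the summary can be traced back to the specific criterion that produced it.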

We believe in automation with oversight, keeping humans closely in the loop. AI can remove administrative burden, but it cannot replace clinical judgment. When the alternative is manual paperwork and subjective measurement, the case for digitization becomes not just compelling, but urgent.

As trials become more complex and endpoints more sensitive, the industry can no longer afford workflows designed in the 1980s. Modern science demands modern operations. And modern operations demand integrated, intelligent systems that meet the moment.